After three days dedicated to building sustainable coding habits through reproducible environments, version control workflows, and layered testing, the final part of the S³ School 2026 shifted to what often determines whether research software truly survives beyond a single project: performance awareness, safe deployment practices, and publication readiness. From Monday to Wednesday, the programme helped participants consolidate an end-to-end view of sustainability, moving from “code that works locally” to software that remains usable, trustworthy, and reusable in real research conditions.

Day 4 — Keeping software usable at scale: profiling, optimisation, and responsible AI support

On Day 4, the school explored the idea that correctness is not enough: software also needs to remain usable under realistic workloads as datasets grow and workflows become more demanding. Participants learned how to approach performance improvement methodically, starting from profiling rather than guesswork. The key lesson was pragmatic: optimisation should not be driven by intuition alone, but by evidence about bottlenecks, ensuring efforts focus on what actually limits performance.

This session also reinforced that performance work is part of sustainability: slow or memory-hungry code can become unusable in shared infrastructures, fragile on laptops, or impossible to rerun in reasonable time. By encouraging participants to treat performance as a measurable aspect of software quality, the training provided a concrete pathway for improving long-term usability without falling into premature optimisation.
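The profile-first approach described here can be illustrated with Python's standard `cProfile` and `pstats` modules. The sketch below is only an illustration, not material from the school: the functions `slow_sum_of_squares`, `analysis`, and `profile_call` are hypothetical names, and the workload is a deliberately naive stand-in for a real analysis step.

```python
import cProfile
import io
import pstats


def slow_sum_of_squares(n):
    """Deliberately naive: builds a full list before summing it."""
    squares = []
    for i in range(n):
        squares.append(i * i)
    return sum(squares)


def analysis(n):
    """Hypothetical workload standing in for a real analysis step."""
    return slow_sum_of_squares(n)


def profile_call(func, *args, top=10):
    """Run func under cProfile and return (result, text report).

    The report lists up to `top` entries sorted by cumulative time,
    so the most expensive call paths appear first.
    """
    profiler = cProfile.Profile()
    result = profiler.runcall(func, *args)
    buffer = io.StringIO()
    stats = pstats.Stats(profiler, stream=buffer)
    stats.sort_stats("cumulative").print_stats(top)
    return result, buffer.getvalue()


if __name__ == "__main__":
    result, report = profile_call(analysis, 200_000)
    print(report)
```

Reading the report before changing any code shows which function dominates the runtime, so optimisation effort can go to the measured bottleneck rather than to the part of the code that merely looks slow.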

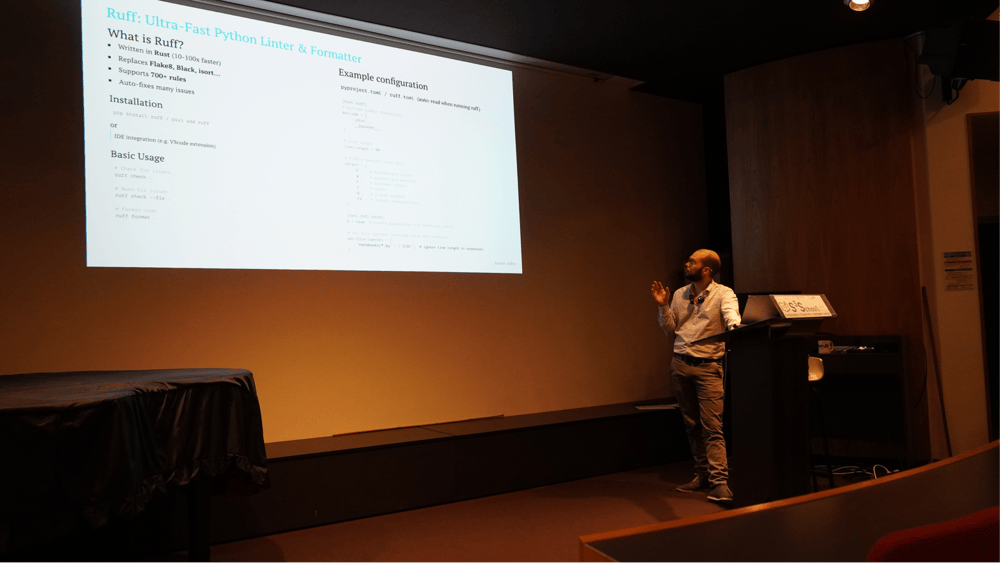

Day 4 also introduced AI-assisted development as an emerging practice that can support research coding, but only when used responsibly. Rather than presenting AI as a replacement for expertise, the school highlighted it as a productivity amplifier for targeted tasks such as clarifying code intent, improving readability, suggesting refactorings, or drafting test cases. At the same time, participants discussed essential safeguards—avoiding secret leakage, not executing untrusted code, and validating outputs through testing—to ensure that AI support strengthens reliability rather than introducing new risks.

Day 5 — Deployment-ready practices: containers, security awareness, and documentation

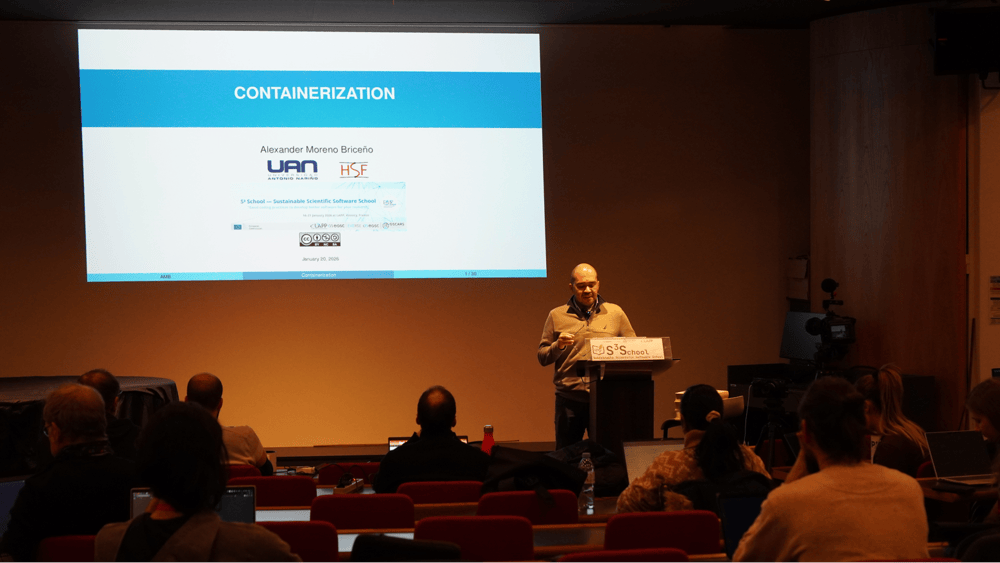

Day 5 brought the sustainability discussion closer to real deployment and reuse scenarios. A first core theme was containerisation, introduced as a practical mechanism to stabilise execution environments across machines and infrastructures. Participants followed an end-to-end workflow, from building an image to running an analysis and producing outputs, illustrating how containers can reduce friction for onboarding and reproducibility. The session also addressed practical realities such as platform differences (native support on Linux and virtualisation layers on other systems) and encouraged clean operational habits for running and removing containers.

The programme then broadened to security awareness for research software, focusing on simple, realistic risk patterns. Participants discussed how dependency ecosystems evolve continuously, why supply-chain risks matter in scientific projects, and how basic safeguards (dependency discipline, safer defaults, and review habits) support trustworthiness over time. In this perspective, security is part of what makes software robust enough to be reused safely beyond its original team.
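One possible reading of "dependency discipline" is pinning exact versions so that an environment does not silently change when upstream packages are updated. The following sketch assumes a pip-style requirements list and flags entries without an exact `==` pin. The function name `unpinned_requirements` and the package names are hypothetical, and the parsing is intentionally simplified (it ignores URLs, extras, and hashes).

```python
def unpinned_requirements(lines):
    """Return requirement lines that do not pin an exact version with '=='.

    Simplified assumption: each line is 'name<specifier>' in pip style.
    Comments, blank lines, and pip options such as '-r other.txt' are skipped.
    """
    loose = []
    for raw in lines:
        line = raw.split("#", 1)[0].strip()  # drop trailing comments
        if not line or line.startswith("-"):  # blank line or pip option
            continue
        spec = line.split(";", 1)[0]  # ignore environment markers
        if "==" not in spec:
            loose.append(line)
    return loose


if __name__ == "__main__":
    example = [
        "# hypothetical project dependencies",
        "examplelib==1.4.2",
        "otherlib>=2.0",
        "thirdlib",
    ]
    for entry in unpinned_requirements(example):
        print(f"not pinned: {entry}")
```

A check of this kind could run in continuous integration as a lightweight review aid. It does not replace dedicated vulnerability scanning or lock files.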

Documentation was the third key pillar of Day 5 and was framed as a deployment-ready practice: software without documentation is difficult to install, run, validate, or maintain. Participants explored lightweight documentation strategies that scale from small projects to reusable tools, starting from a strong README and minimal runnable examples, and progressively building more structured material as complexity grows. The message was clear: documentation does not need to be extensive from day one, but it must be intentional and synchronised with code evolution if the software is meant to outlive its initial development phase.

Day 6 — Making research software FAIR: publishing for reuse, citation, and long-term preservation

The final day focused on what happens when software leaves the development context and becomes a research output. The school highlighted that sharing a repository is not the same as publishing software: publication requires stable versions, clear licensing, machine-readable metadata, and preservation strategies that support reuse over time. Participants explored practical steps that transform a project into a citable artefact, including semantic versioning practices, citation files, and the role of trusted repositories for long-term accessibility.
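To make the versioning step concrete, here is a minimal sketch of the core `MAJOR.MINOR.PATCH` rule from semantic versioning. It is a simplified illustration under stated assumptions: pre-release and build-metadata suffixes are not handled, and the names `Version`, `parse_version`, and `bump` are hypothetical rather than taken from the school's material.

```python
import re
from typing import NamedTuple

# Core MAJOR.MINOR.PATCH pattern only; no leading zeros, no suffixes.
_CORE = re.compile(r"^(0|[1-9]\d*)\.(0|[1-9]\d*)\.(0|[1-9]\d*)$")


class Version(NamedTuple):
    major: int
    minor: int
    patch: int

    def __str__(self):
        return f"{self.major}.{self.minor}.{self.patch}"


def parse_version(text):
    """Parse 'MAJOR.MINOR.PATCH' into a Version, or raise ValueError."""
    match = _CORE.match(text.strip())
    if match is None:
        raise ValueError(f"not a MAJOR.MINOR.PATCH version: {text!r}")
    return Version(*(int(part) for part in match.groups()))


def bump(version, change):
    """Return the next version for a 'breaking', 'feature', or 'fix' change."""
    if change == "breaking":
        return Version(version.major + 1, 0, 0)
    if change == "feature":
        return Version(version.major, version.minor + 1, 0)
    if change == "fix":
        return Version(version.major, version.minor, version.patch + 1)
    raise ValueError(f"unknown change type: {change!r}")


if __name__ == "__main__":
    current = parse_version("1.9.3")
    print(bump(current, "feature"))  # next release after adding a feature
    # Versions compare numerically, field by field, not as strings.
    print(parse_version("1.10.0") > current)
```

Because versions are compared as integer tuples, `1.10.0` sorts after `1.9.3`, which a plain string comparison would get wrong. A stable, ordered version number is what lets a citation or persistent identifier point at one exact release.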

Day 6 wrapped up with an overview of how FAIRness and openness can be practical enablers of reuse: persistent identifiers allow others to cite exact versions, rich metadata improves discovery, and clear licensing removes legal uncertainty. This final module connected the earlier technical practices to the ultimate goal: software that is usable today and reusable tomorrow, by someone else, without the original developer’s presence.

A shared outcome: sustainability as a sequence of small actions

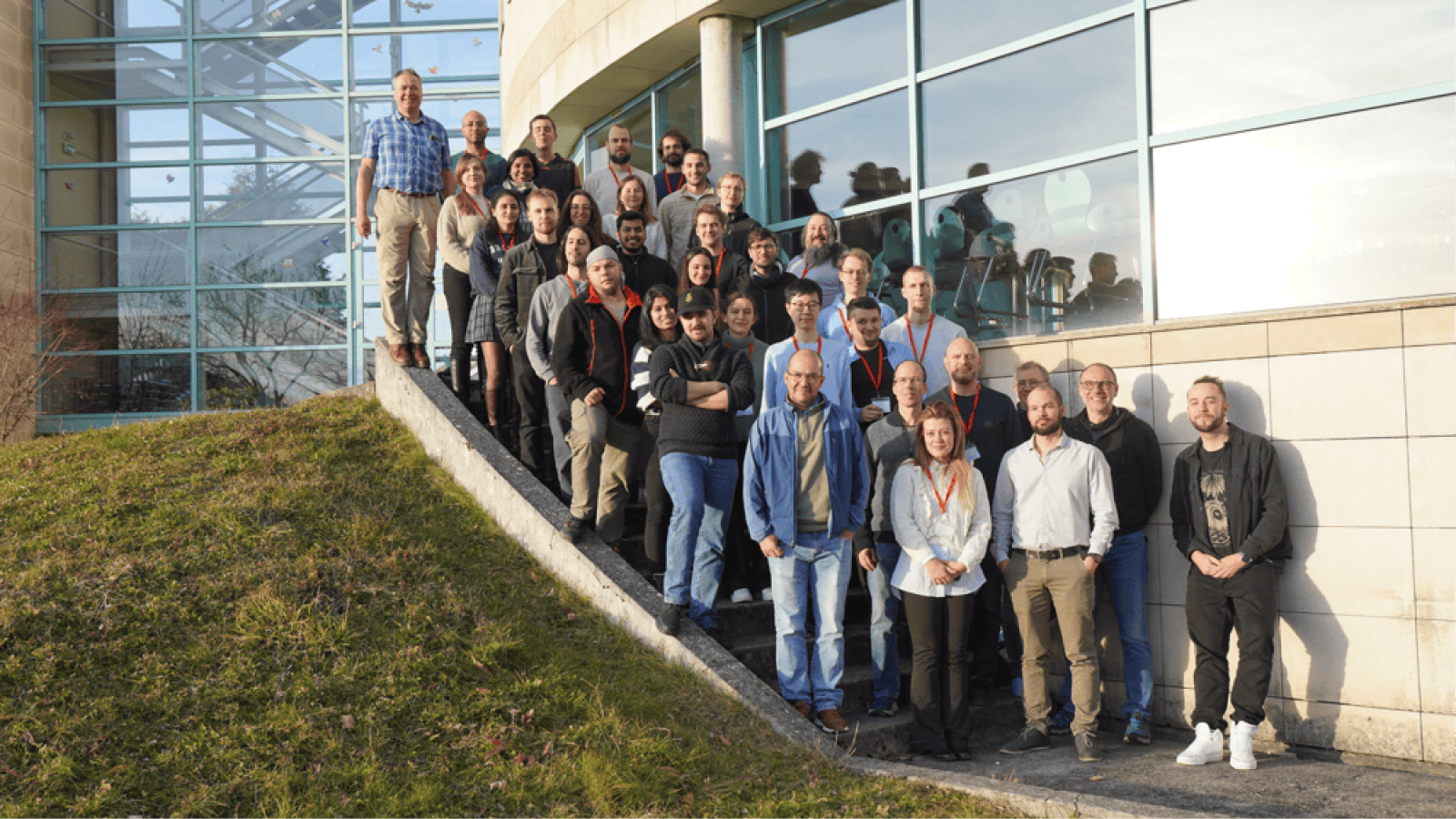

The S³ School (Sustainable Scientific Software School) attracted more than 40 participants. From Latvia to Spain, from Particle Physics to Computational Mechanics, early-career researchers and software developers learned how a sequence of small, repeatable actions, applied consistently, can help achieve sustainability.

This final stretch of the programme also strengthened the collaborative work initiated earlier in the week. Participants produced a checklist designed to help PhD students and research software developers adopt good practices at the right time. The objective is to provide a reusable guide that supports research teams in building software that remains robust, citable, and reusable across scientific contexts. The checklist will soon be available on the OSCARS Resources webpage.