This short article is one in a series addressing the impact of the OSCARS project on Open Science practices in Europe. (Reading suggestion: start with the introduction to the series).

Read the article on Zenodo

A shift from isolated research to open, collaborative science – where data, knowledge, results, and tools are shared as early and widely as possible – relies heavily on software, digital tools, and AI. These technologies are essential for making FAIR data a reality and for accelerating knowledge creation and reuse across disciplines. Importantly, they also create the conditions for discoveries across all scientific domains to be translated more quickly into real-world applications, such as clinical practice, climate action, energy innovation, and evidence-based policymaking.

Software provides the foundation to manage, share, and analyse research outputs.

Digital tools for metadata generation and for assessing how well the FAIR principles have been implemented ensure that research products are findable and reusable, whereas domain-specific platforms allow both sensitive and non-sensitive data to be handled responsibly and effectively.

Artificial Intelligence enhances discovery by automating data processing and data cleansing, as well as scientific analysis, anomaly detection and pattern recognition. These AI-enabled capabilities shorten the path from raw data to actionable insights, whether for personalised medicine, societal decision-making, sustainable environmental practices, or advanced materials development.

Beyond technical performance, there is a growing awareness that AI and digital tools must be developed and deployed responsibly. Issues of transparency, bias mitigation, energy efficiency, and long-term software sustainability are becoming central to the Open Science agenda. Addressing these challenges ensures that AI contributes not only to accelerating science but also to trustworthy and environmentally responsible research practices, facilitating uptake across domains.

As stated in the European Open Science Cloud (EOSC) Strategic Research and Innovation Agenda (SRIA), investing in software, AI and tools is indispensable to build a “web of FAIR data”. In fact, they are the very foundation of a federated EOSC, as they enable discovery, access, reuse, and reproduction of scientific resources. Further training and promotion are still needed to drive the cultural change required for the broad research community to adopt FAIR and Open Science practices. This cultural change is also a translational one: equipping researchers from all disciplines with the skills to move from data stewardship to delivering tangible benefits for patients and citizens.

OSCARS and the Science Clusters’ contribution to software, AI and tools for Open Science

OSCARS, building on the tools developed in previous H2020 cluster projects, emphasises the consolidation, reuse, and composability of software across Research Infrastructures (RIs).

With this main goal, the five Science Clusters (SCs) – ENVRI, ESCAPE, LS-RI, PaNOSC, and SSHOC – have been developing interoperable platforms, reusable workflows, and domain-specific services that support FAIR data, enable AI-driven research, and foster cross-disciplinary composability. They are working towards their integration into EOSC to enhance accessibility, reproducibility, and collaboration across scientific domains.

In this respect, all Science Clusters have been working within OSCARS to identify, further develop and consolidate Composable Open Data and Analysis Services (CODAS) – modular, interoperable software environments that allow for the flexible composition of research workflows. The clusters aim to integrate software into the EOSC to make it discoverable and reusable across domains, helped by a shared software catalogue that includes a variety of domain-specific tools.

Thus OSCARS, through its clusters, delivers a comprehensive tools ecosystem that supports the entire research data lifecycle – including metadata generation, access control, analysis, visualisation, and archiving. The development of tools and platforms is aligned with EOSC and FAIR standards, ensuring compatibility with the future Common European Data Space and related Open Science frameworks.

To empower researchers, OSCARS encourages the uptake of those resources through its Open Call for Open Science projects and services.

Both through the cluster agendas and its funded projects, OSCARS promotes reproducible and FAIR AI training environments, using workflow and analysis environments (e.g. Galaxy, Jupyter Notebooks) and metadata standards (e.g. RO-Crate), while addressing ethical and regulatory dimensions of AI use, especially in SSH and Life Sciences (e.g. governance of sensitive data, human-AI interaction).
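As an illustration of how a metadata standard such as RO-Crate can describe a training environment, the following is a minimal sketch that assembles an `ro-crate-metadata.json` document using only the Python standard library. It assumes the RO-Crate 1.1 layout (a metadata descriptor plus a root dataset inside a JSON-LD `@graph`). The crate name, file paths, licence and date are invented placeholders, and the helper `build_crate_metadata` is hypothetical rather than part of any OSCARS or cluster tool.

```python
import json


def build_crate_metadata(name, description, date_published, license_url, files):
    """Return a minimal RO-Crate 1.1 style metadata document as a dict.

    `files` is a list of (relative_path, human_readable_name) tuples.
    """
    # One "File" entity per packaged file (notebook, workflow, data, ...).
    file_entities = [
        {"@id": path, "@type": "File", "name": label}
        for path, label in files
    ]
    # The root dataset describes the crate as a whole and lists its parts.
    root_dataset = {
        "@id": "./",
        "@type": "Dataset",
        "name": name,
        "description": description,
        "datePublished": date_published,
        "license": {"@id": license_url},
        "hasPart": [{"@id": entity["@id"]} for entity in file_entities],
    }
    # The metadata descriptor points at the root dataset.
    descriptor = {
        "@id": "ro-crate-metadata.json",
        "@type": "CreativeWork",
        "conformsTo": {"@id": "https://w3id.org/ro/crate/1.1"},
        "about": {"@id": "./"},
    }
    return {
        "@context": "https://w3id.org/ro/crate/1.1/context",
        "@graph": [descriptor, root_dataset, *file_entities],
    }


# Placeholder values, invented for this example only.
crate = build_crate_metadata(
    name="Example AI training environment",
    description="Notebook and workflow used to train an example model.",
    date_published="2025-01-01",
    license_url="https://creativecommons.org/licenses/by/4.0/",
    files=[
        ("train.ipynb", "Training notebook"),
        ("workflow.ga", "Preprocessing workflow"),
    ],
)
print(json.dumps(crate, indent=2))
```

Writing the resulting JSON to a file named `ro-crate-metadata.json` next to the listed files would give a directory that other tools can inspect; in practice a dedicated RO-Crate library and a validator would normally be preferred over hand-built dictionaries.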

Moreover, to enhance the sustainability of the software and tools made available to the community, OSCARS plans to set up a “skills and competences” registry in cooperation with other communities (e.g. Zenodo). This will help researchers find experts, training and software resources.

One of OSCARS’s unique strengths is its inter-cluster cross-pollination. Software and tools developed in one domain are evaluated and adapted for others. Common tools are shared and improved across clusters, and cross-cluster technical working groups foster harmonisation and accelerate the adoption of best practices. Another meaningful aspect is the role of community-driven software development. By engaging researchers directly in the design, testing, and evolution of tools, OSCARS and the clusters promote a culture of shared ownership and continuous improvement that can serve as a backbone for Open Science communities. This participatory approach also increases trust and adoption, since tools reflect real-world needs across disciplines.

Below is an outline of the key contributions from each of the Science Clusters to the area of software, AI and tools.

ENVRI (Environmental Sciences)

In the ENVRI Science Cluster, the ENVRI-Hub – a platform developed to integrate environmental RIs and facilitate access to environmental data and services for interdisciplinary research – offers harmonised access to subdomain-specific data, software, tools and services, including cloud-based data analytics frameworks and Jupyter/Galaxy-based science demonstrators that integrate live code and visualisation. Among other tasks, the ENVRI Competence Centre (CC) will evaluate open-source tools for adoption by the community.

ESCAPE (Astrophysics, Nuclear & Particle Physics)

The ESCAPE Science Cluster maintains a software catalogue, which is continuously expanded with software, workflows, and methods developed within the cluster. AI tools are deployed for data management, exemplified by the Data Lake model and the Data Infrastructure for Open Science (DIOS), which support exabyte-scale FAIR data with orchestrated access. Moreover, Virtual Research Environments (VREs) have been integrated with test science projects and community-driven services.

LS-RI (Life Sciences)

The LS-RI Science Cluster provides a suite of software and tools, including WorkflowHub [1], Galaxy [2] and RO-Crate [3], sensitive data toolboxes, and FAIR metadata schemas.

AI-based tools are used, for instance, for protein design, clinical diagnosis or drug development. These applications have a direct translational impact, as they accelerate the path from bench discoveries to bedside applications, supporting faster diagnostics, more efficient drug pipelines, and personalised healthcare approaches. They support the analysis of interdisciplinary, reproducible workflows and enhance interoperability for sensitive biomedical data.

The acceleration of AI in the Life Sciences (LS) is also fuelled by the expanding availability of large datasets. Ensuring these datasets are of high quality and are enriched with appropriate metadata is not only a technical necessity but also a translational one, since the reliability of downstream applications such as clinical trials, digital health technologies, or regulatory submissions depends on trustworthy, well-annotated data.

LS-RI also promotes software composability by offering reusable tools and employing software packaging and containerisation technologies, such as Conda and Bioconda. Data enrichment tools and environments (e.g. VREs) continuously connect new types of datasets, increasing the potential of AI. These capabilities do not just serve data scientists; they also help translational researchers qualify and validate LS data, ensuring that computational outputs can inform real-world decision-making in healthcare and LS policy.

PaNOSC (Photon and Neutron Science)

The PaNOSC Science Cluster maintains a shared software packaging system based on the CernVM File System (CVMFS) [4] and supports the ongoing development of its VRE for data analysis, VISA (Virtual Infrastructure for Scientific Analysis). To enhance automation, it has extended H5Web – a web-based viewer for HDF5 [5] files – for large-scale interactive data visualisation, and integrated tools such as Galaxy and RO-Crate. Its ultimate goal is to build a federated search API and data portal for FAIR data across European PaN facilities.

SSHOC (Social Sciences & Humanities)

The SSH Open Science Cluster operates the SSH Open Marketplace – a discovery platform for tools, services, and training materials – and supports the integration of SSH-specific workflows into common analysis environments, such as Jupyter Notebooks. Tools such as AI-based modelling pipelines and metadata crosswalks have been developed to enhance data interoperability across disciplines. SSHOC also promotes compliance with the GDPR and the AI Act, helping to embed FAIR and CARE data practices within SSHOC and across clusters. This expertise fosters the sharing of methodological and ethical insights with other clusters, enriching cross-disciplinary collaboration.
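To make the idea of a metadata crosswalk concrete, the sketch below renames the fields of one record from a source schema to a target schema and reports anything that has no mapping. It is a toy example under stated assumptions: the source field names loosely follow Dublin Core and the target names loosely follow DataCite, but the mapping is simplified and illustrative, not an official or SSHOC-provided crosswalk, and the sample record is invented.

```python
# Illustrative crosswalk: source field name -> target field name.
# Source names loosely follow Dublin Core, target names loosely follow
# DataCite; this is a simplified assumption, not an official mapping.
CROSSWALK = {
    "title": "titles",
    "creator": "creators",
    "date": "publicationYear",
    "identifier": "identifier",
}


def apply_crosswalk(record, crosswalk):
    """Rename the fields of one record; return (mapped, unmapped)."""
    mapped = {}
    unmapped = {}
    for field, value in record.items():
        target = crosswalk.get(field)
        if target is None:
            # Keep fields without a mapping so that nothing is lost silently.
            unmapped[field] = value
        else:
            mapped[target] = value
    return mapped, unmapped


# Invented sample record, for illustration only.
source_record = {
    "title": "Survey of reading habits",
    "creator": "Example Author",
    "date": "2024",
    "coverage": "Europe",
}

mapped, unmapped = apply_crosswalk(source_record, CROSSWALK)
print(mapped)
print(unmapped)
```

Returning the unmapped fields separately is a deliberate choice in this sketch: a real crosswalk usually also has to convert values and handle one-to-many mappings, and surfacing the gaps makes it clearer where that extra work would be needed.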

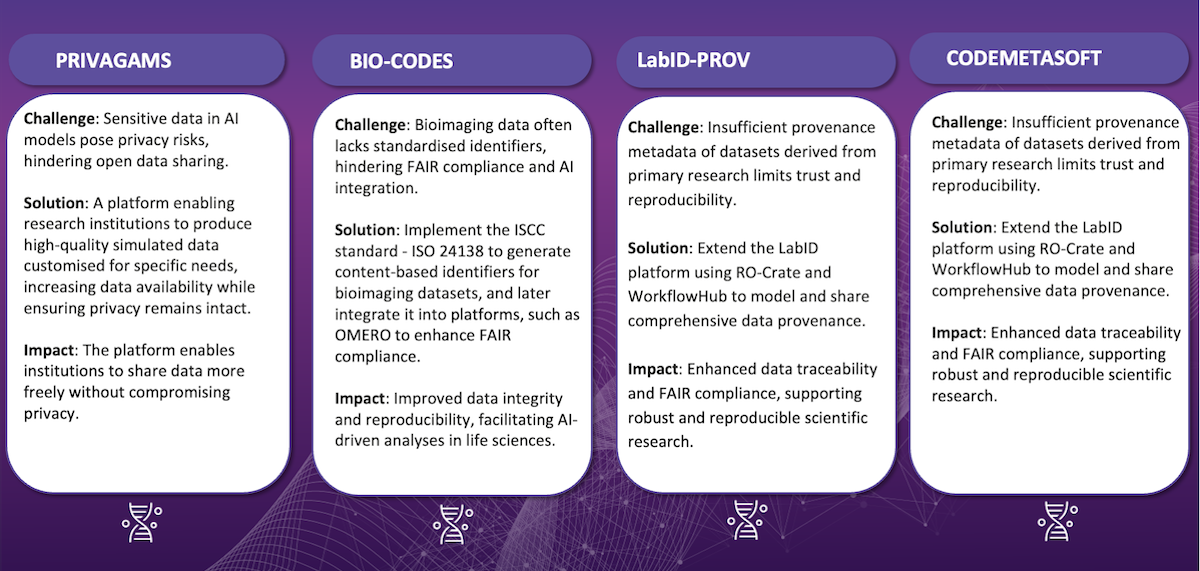

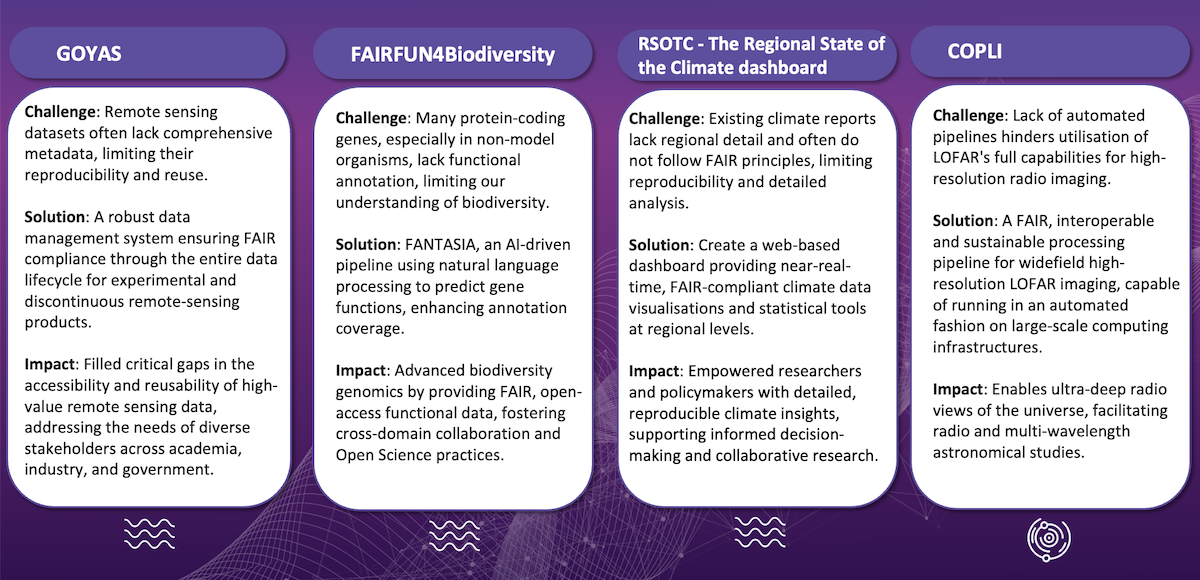

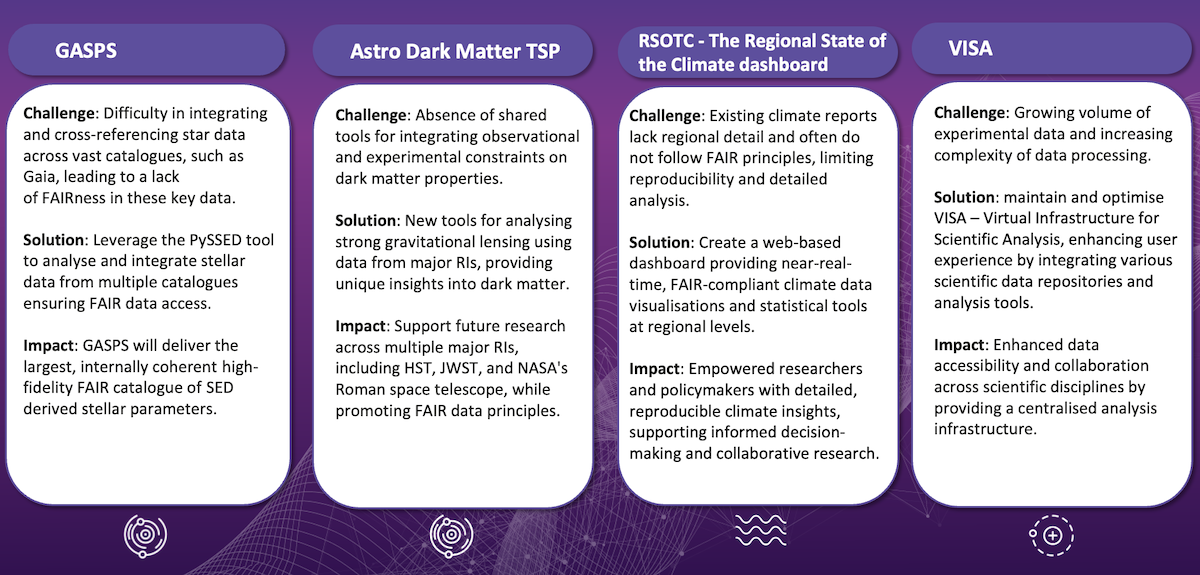

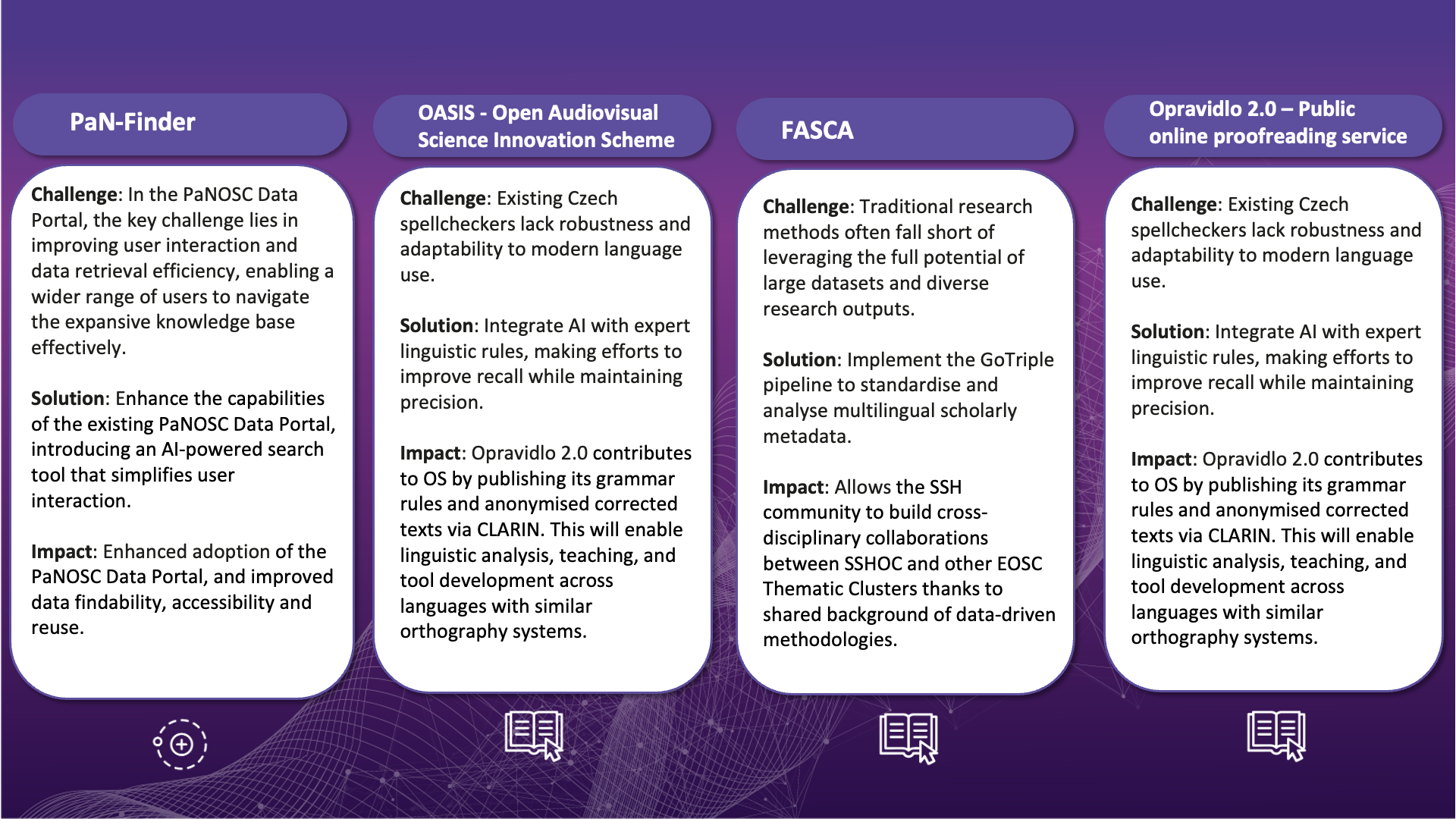

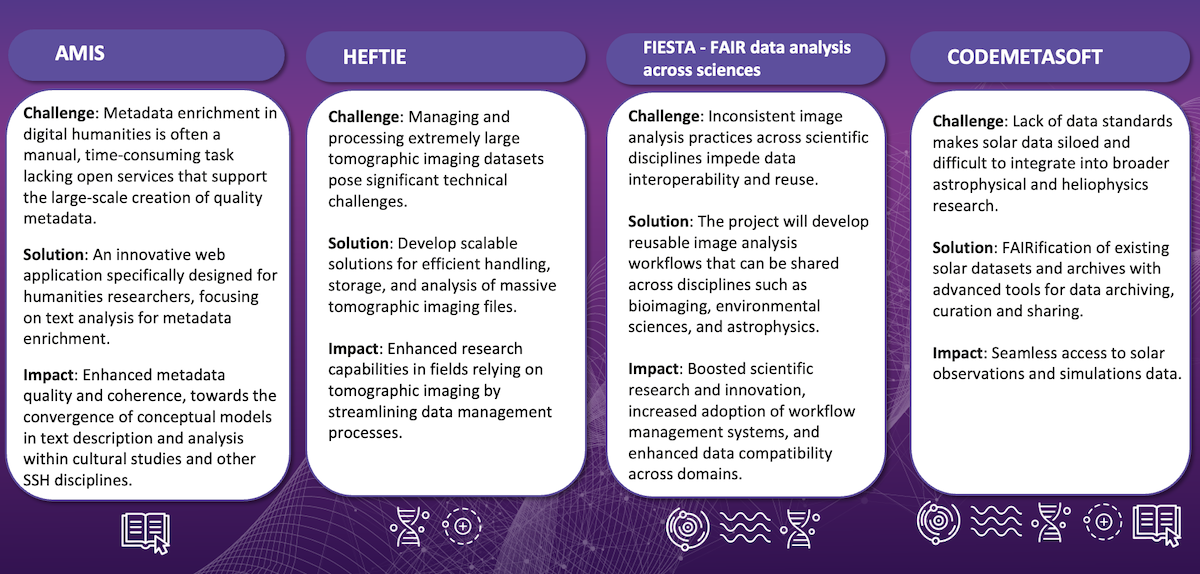

OSCARS-funded projects addressing the thematic area of Software, AI and Tools for Open Science

Below is an overview of the OSCARS funded projects working to make improvements primarily in these areas:

Browse all OSCARS funded projects here

Useful resources

AI4EOSC - An EU project focused on creating platforms and tools for development, deployment, and sharing of AI/ML/DL models in EOSC under FAIR and Open Science norms.

Research Software Quality Toolkit (RSQT) - A toolkit for assessing excellence, sustainability, and FAIRness of research software; helpful for anyone involved in software development.

FAIR-IMPACT - Horizon Europe project, which identifies and supports tools, practices, technical specifications, and policies to expand FAIR solutions across EOSC in multiple domains, including software as a research output.

FAIR for Research Software Principles (FAIR4RS) - Principles tailored to treating research software as first-class within the FAIR ecosystem.

EOSC-Life WP4 Toolbox: Toolbox for sharing of sensitive data - A concept description of tools and practices for sharing sensitive biomedical data securely in EOSC.

Materials Cloud - Platform for computational science that provides not only open datasets but also tools, workflows, provenance tracking, reproducibility etc., illustrating FAIR in practice.

KU Leuven: FAIR research software guidance - Practical guidelines and checklists for research software to comply with FAIR principles (licensing, version control, metadata, environment capture etc.).

FAIR Practices in Artificial Intelligence - Recommendations and examples for applying FAIR to AI models (metadata, provenance, interoperability) from Europe-focused workshops and communities.

Authors

Nicoletta Carboni (CERIC-ERIC), Romain David (ERINHA), Franciska de Jong (Utrecht University), Jonathan Ewbank (ERINHA), Darja Fišer (CLARIN ERIC), Nektarios Liaskos (EATRIS), Justine Thomas (CNRS-LAPP), Friederike Schmidt-Tremmel (Trust-IT).

DOI

http://doi.org/10.5281/zenodo.17225296

Footnotes

[1] WorkflowHub: a registry for computational workflows.

[2] Galaxy is an open, web-based platform designed to make computational research FAIR, promoting Open Science practices.

[3] RO-Crate is a metadata packaging format for describing research objects in a machine-actionable way.

[4] CVMFS – CernVM File System, a read-only file system designed for delivering software and data to large-scale computing environments.

[5] Hierarchical Data Format (HDF) is a set of file formats (HDF4, HDF5) designed to store and organize large amounts of data.